The Physics of Yield: Why Generative AI is the Wrong Tool for Factory Tuning

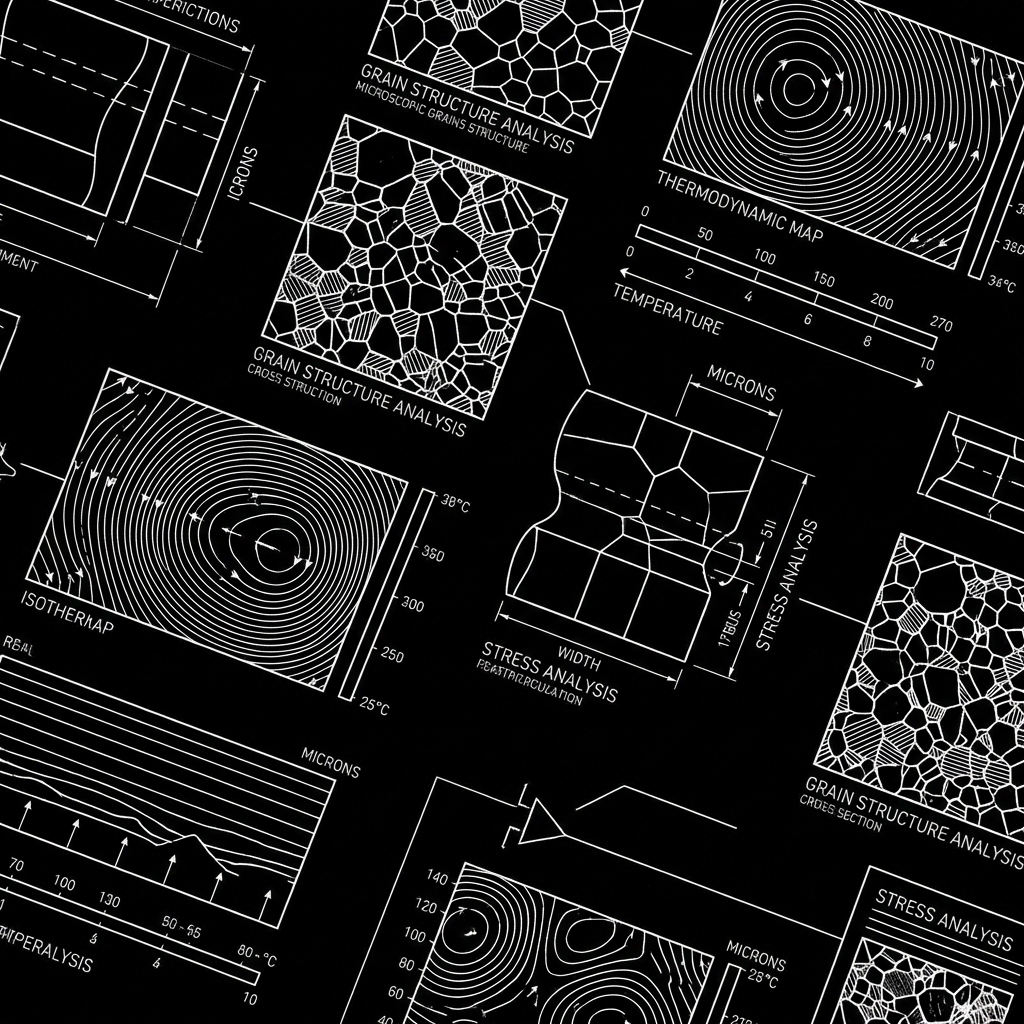

An argument for physical, first-principles modeling over large language models in high-precision manufacturing. Why LLMs fail in the face of thermodynamic reality.

In the wake of the latest generative artificial intelligence wave, a dangerous narrative has taken hold in the executive suites of global manufacturing. It's a seductive promise: a "General Purpose Intelligence" that can ingest every manual, every batch record, and every millisecond of sensor data to "chat" its way to a more efficient plant. Corporate boards, fearful of being left behind, are pushing their operational teams to deploy Large Language Models (LLMs) as the primary engine for process optimization.

At LOCHS RIGEL, we believe this pursuit is not just academically misguided, but operationally hazardous. In the high-stakes world of precision manufacturing—where microns, milliseconds, and thermal gradients determine EBITDA—the factory floor is governed by the unyielding laws of thermodynamics, not the statistical probability of a linguistic string.

Determinism > Probability

"The factory floor is a deterministic environment. To optimize it, your AI must be constrained by the same physical constants as your machines."

To solve the modern yield crisis, we don't need "smarter" chatbots; we need a return to First-Principles Intelligence.

The Fundamental Flaw: Stochastic Parrots in a Deterministic World

To understand why Generative AI (LLMs) is the wrong tool for factory tuning, we must first understand what an LLM actually is. At its core, an LLM is a "stochastic parrot" or, more accurately, a highly sophisticated prediction engine for the next most likely token in a sequence. It is based on correlation, not causation.

In a customer service setting or a marketing brainstorming session, a 3% error rate is an acceptable "edge case." In fact, the "hallucinations" of an AI can sometimes be seen as "creativity" in those contexts. But in an industrial chemical reactor, a high-precision CNC line, or a semiconductor lithography tool, a 3% error (or even a 0.5% error) isn't a "hallucination"—it's an explosion, a scrapped batch, or an existential threat to asset integrity.

Why LLMs Hallucinate in Production:

- Ignorance of Mass and Energy Balance: An LLM has no internal concept of physical conservation laws. It can suggest process parameters (like increasing flow rates while simultaneously decreasing pressure and temperature) that are statistically likely in its training text but physically impossible in a sealed vessel. It doesn't know that what goes in must come out.

- Linguistic vs. Numerical Precision: Industrial tuning requires high-fidelity numerical reasoning. LLMs struggle with "tokenization errors" when handling floating-point numbers. To an LLM, the difference between 0.001 and 0.0001 is a single character change; to a turbine blade, it separates successful operation from catastrophic failure.

The Hallucination Liability

"A 1% error in a chatbot is a joke; a 1% error in a chemical reactor is a shutdown. LLMs should never govern safety-critical industrial loops."

- Non-Linearity and Causal Chains: Industrial processes are not linear. They possess thermal and mechanical inertia. A change in the cooling jacket temperature at Step 1 may not manifest its effect on the physical product until Step 14. Linguistic models are designed to find patterns in the proximity of data, not the deep, multi-variate causal chains of industrial time-series events.
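The conservation point in the list above can be made concrete with a few lines of code. What follows is a minimal sketch, not a production check: the `VesselProposal` type, its field names, and the tolerance are all hypothetical, and it assumes a sealed vessel at steady state with no accumulation.

```python
from dataclasses import dataclass


@dataclass
class VesselProposal:
    """Hypothetical setpoint proposal for a sealed vessel at steady state."""
    inflow_kg_s: float   # total mass flow entering the vessel
    outflow_kg_s: float  # total mass flow leaving the vessel


def violates_mass_balance(p: VesselProposal, tol_kg_s: float = 1e-3) -> bool:
    """With no accumulation, what goes in must come out (within tolerance)."""
    return abs(p.inflow_kg_s - p.outflow_kg_s) > tol_kg_s


# Illustrative values only; neither proposal comes from a real plant.
plausible = VesselProposal(inflow_kg_s=2.50, outflow_kg_s=2.50)
impossible = VesselProposal(inflow_kg_s=3.10, outflow_kg_s=2.50)

print(violates_mass_balance(plausible))   # False
print(violates_mass_balance(impossible))  # True
```

A text-prediction model has no such check built in; a physics-aware layer can reject the second proposal before it ever reaches a controller.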

The Yield Crisis is a Physics Crisis

Modern manufacturing is currently facing a "Yield Crisis." Whether it's the 30% scrap rates in new EV battery Gigafactories or the complex impurities in advanced pharmaceutical biologics, the common denominator is Process Drift.

When a process drifts, traditional AI attempts to solve it by looking purely at history: "What did we do last time the yield fell?" But the factory floor is dynamic. Ambient humidity changes. Raw material purity from the supplier fluctuates. Machine components wear down at varying rates.

If your AI model is purely historical (like a transformer-based LLM), it is always looking in the rearview mirror. It is trying to solve today's physics with yesterday's statistics.

The LOCHS RIGEL Solution: Physics-Informed Intelligence (PII)

To move beyond the hype, we advocate for an architecture that embeds the laws of physics directly into the silicon. This is Physics-Informed Intelligence.

Pillar 1: Physics-Informed Neural Networks (PINNs)

Instead of training a model on "blind" data alone, we train it on Data + Partial Differential Equations (PDEs).

In a PINN, the AI's loss function (the mathematical penalty it receives for being wrong) includes a term that measures how badly a prediction violates known physical laws. If the AI suggests a setpoint that would violate the laws of thermodynamics or fluid dynamics, that term drives the loss up sharply, so training steers the model away from physically impossible states instead of letting it "hallucinate" them.
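To illustrate how a physics term enters the loss, here is a deliberately small sketch. It is not a neural network and not LOCHS RIGEL's implementation: it only shows the composition "data misfit plus physics residual" for a single cooling curve. It assumes Newton's law of cooling, dT/dt = -K (T - T_ENV), as the governing law (an ordinary differential equation chosen for brevity instead of a PDE), and the constants `K`, `T_ENV`, and the `weight` parameter are made up for the example.

```python
import math

K = 0.1       # assumed cooling constant, 1/s (hypothetical)
T_ENV = 25.0  # assumed ambient temperature, deg C (hypothetical)


def physics_residual(temps, dt):
    """Residual of dT/dt + K*(T - T_ENV) = 0 using central differences."""
    res = []
    for i in range(1, len(temps) - 1):
        dTdt = (temps[i + 1] - temps[i - 1]) / (2 * dt)
        res.append(dTdt + K * (temps[i] - T_ENV))
    return res


def composite_loss(pred, measured, dt, weight=1.0):
    """Mean squared data misfit plus a weighted mean squared physics residual."""
    data = sum((p - m) ** 2 for p, m in zip(pred, measured)) / len(pred)
    res = physics_residual(pred, dt)
    physics = sum(r ** 2 for r in res) / len(res)
    return data + weight * physics


dt = 0.5
times = [i * dt for i in range(21)]
# A trajectory that obeys the cooling law exactly.
consistent = [T_ENV + 75.0 * math.exp(-K * t) for t in times]
# A trajectory that heats up on its own, which the cooling law forbids.
impossible = [100.0 + 2.0 * t for t in times]

measured = consistent  # noise-free stand-in for sensor data
loss_ok = composite_loss(consistent, measured, dt)
loss_bad = composite_loss(impossible, measured, dt)
print(loss_ok < loss_bad)  # True
```

In this sketch the penalty is finite and scales with `weight`; how strongly to weight the physics term against the data term is a design choice that depends on how much the governing equations and the sensors are each trusted.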

The results of this approach are transformative:

- Data Efficiency: While an LLM needs trillions of tokens, a PINN can achieve 99.9% accuracy with 10x to 100x less training data because it already "knows" the rules of the game.

- Edge Stability: Because the model is grounded in physics, it remains stable even when it encounters "Out-of-Distribution" data (scenarios the factory hasn't seen before).

Pillar 2: The "High-Fidelity" Digital Twin

At LOCHS RIGEL, we never deploy an AI model directly to an industrial control loop running on a PLC. We implement a Validation Sandbox.

Before a suggestion from the AI hits the shop floor, it is passed through a high-fidelity digital twin, a simulator built on classical engineering tools (CFD, FEA). This "Twin" acts as the final sanity check. If the AI suggests a 5% increase in line speed, the Twin simulates the vibrational stress and thermal load. If the simulation predicts that the change would cause process drift, the Twin vetoes the AI's suggestion.
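The veto logic described above might be sketched as a simple gate. Everything here is an assumption made for illustration: `toy_twin` stands in for a real CFD/FEA-backed simulator with invented formulas, and the vibration and temperature limits are hypothetical numbers, not engineering guidance.

```python
from typing import Callable, NamedTuple


class TwinResult(NamedTuple):
    peak_vibration_mm_s: float
    peak_temp_c: float


# Hypothetical acceptance limits for the simulated run.
MAX_VIBRATION_MM_S = 4.5
MAX_TEMP_C = 180.0


def toy_twin(line_speed: float) -> TwinResult:
    """Stand-in for a high-fidelity simulator; the formulas are invented."""
    return TwinResult(
        peak_vibration_mm_s=0.04 * line_speed,
        peak_temp_c=120.0 + 0.5 * line_speed,
    )


def gate(current: float, proposed: float,
         twin: Callable[[float], TwinResult]) -> float:
    """Return the proposed setpoint only if the twin predicts a safe run."""
    result = twin(proposed)
    safe = (result.peak_vibration_mm_s <= MAX_VIBRATION_MM_S
            and result.peak_temp_c <= MAX_TEMP_C)
    return proposed if safe else current


print(gate(100.0, 105.0, toy_twin))  # 105.0: the 5% increase passes
print(gate(100.0, 130.0, toy_twin))  # 100.0: vetoed, current setpoint kept
```

The design choice worth noting is that a veto falls back to the current, known-good setpoint instead of attempting to "repair" the suggestion.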

This is the bridge between "Silicon Valley AI" and "Industrial Reality."

The Execution Roadmap for Operators

If you are an executive responsible for assets worth hundreds of millions of dollars, do not bet your line on a chatbot. Follow this First-Principles Roadmap:

Phase 1: Establish the "Ground Truth" Telemetry

You cannot optimize what you cannot measure accurately. Before investing in AI, ensure your sensors are calibrated and synchronized. If your time-series data is misaligned by even 100 milliseconds, your AI will be trying to correlate events that didn't happen together.
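As a rough illustration of checking synchronization, the sketch below estimates the offset between two sensor streams by sliding one against the other and keeping the shift with the strongest overlap. The 10 ms sample period and the synthetic pulse are assumptions for the example; with real data, a measured offset can also reflect genuine physical transport delay, so it needs an engineer's interpretation before any correction is applied.

```python
def best_lag(a, b, max_lag):
    """Shift of b relative to a (in samples) that maximizes their overlap."""
    def score(lag):
        return sum(
            a[i] * b[i + lag]
            for i in range(len(a))
            if 0 <= i + lag < len(b)
        )
    return max(range(-max_lag, max_lag + 1), key=score)


SAMPLE_PERIOD_MS = 10  # assumed sampling period (hypothetical)

# Synthetic data: the same pulse appears 10 samples later on sensor B.
a = [0.0] * 100
b = [0.0] * 100
for i in range(20, 25):
    a[i] = 1.0
    b[i + 10] = 1.0

lag = best_lag(a, b, max_lag=30)
print(lag * SAMPLE_PERIOD_MS)  # 100 (milliseconds)
```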

Phase 2: Hire Physicists, Not Just Data Scientists

A Data Scientist can find a correlation in a spreadsheet. A Physicist (or a Controls Engineer) can tell you if that correlation is a causal reality or a statistical fluke. Your "AI Team" must include people who understand the chemistry, the mechanics, and the heat transfer of your specific product.

Phase 3: The Hybrid Control Stack

Use traditional, deterministic automation (PID loops and PLC logic) to keep the plant safe. Use Physics-Informed AI to find the "narrow peaks" of optimal performance within those safety boundaries. Intelligence should be an augmentative layer, not a replacement for the proven logic of the line.
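One way to picture the hybrid stack is a deterministic clamp and a PID loop sitting between the optimizer and the plant. This is a sketch under stated assumptions, not a reference design: the safety envelope, the PID gains, the AI suggestion, and the first-order plant model are all hypothetical.

```python
def clamp(value, low, high):
    """Force a value into the closed interval [low, high]."""
    return max(low, min(high, value))


class PID:
    """Textbook PID controller with no anti-windup (kept short on purpose)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


SAFE_MIN_C, SAFE_MAX_C = 150.0, 200.0  # hypothetical safety envelope

ai_suggestion_c = 215.0  # the optimizer asks for more than the envelope allows
setpoint_c = clamp(ai_suggestion_c, SAFE_MIN_C, SAFE_MAX_C)

pid = PID(kp=0.8, ki=0.2, kd=0.0)
temp_c, dt = 170.0, 1.0
for _ in range(200):
    heater = pid.step(setpoint_c, temp_c, dt)
    # Toy first-order plant: heating input minus loss to 25 deg C ambient.
    temp_c += dt * (0.5 * heater - 0.05 * (temp_c - 25.0))

print(setpoint_c, round(temp_c, 1))
```

The optimizer only ever proposes a setpoint; the clamp enforces the boundary and the PID loop does the actual regulation, so the plant settles at the clamped value even though the suggestion exceeded it.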

The Walkaway: Three Realities for the Board

As you evaluate your firm's AI spending, keep these three operational truths in mind:

- AI is a Tool, Not a Strategy: An algorithm is only as good as the physical model it sits upon. If your underlying process is poorly understood, no amount of "Intelligence" will fix it.

- Yield is a Physics Game: Every percent of scrap avoided is pure EBITDA. In the battle for yield, the firm that understands the science of its line will always defeat the firm that only understands the "likelihood" of its data.

- Deterministic Stability is Non-Negotiable: In the factory, "consistency" is more valuable than "creativity." Your AI stack must be as predictable and reliable as the steel it controls.

Generative AI will change how you write your reports, how you manage your inbox, and how you search your documentation. But it will not bake your bread, forge your steel, or synthesize your medicine.

The next generation of industrial winners won't be the ones with the largest LLM budgets, but the ones who best bridge the gap between bits and atoms using the indestructible laws of the physical world.

At LOCHS RIGEL, we don't just "talk" to the machines. We engineer the intelligence that understands them.